Data Types

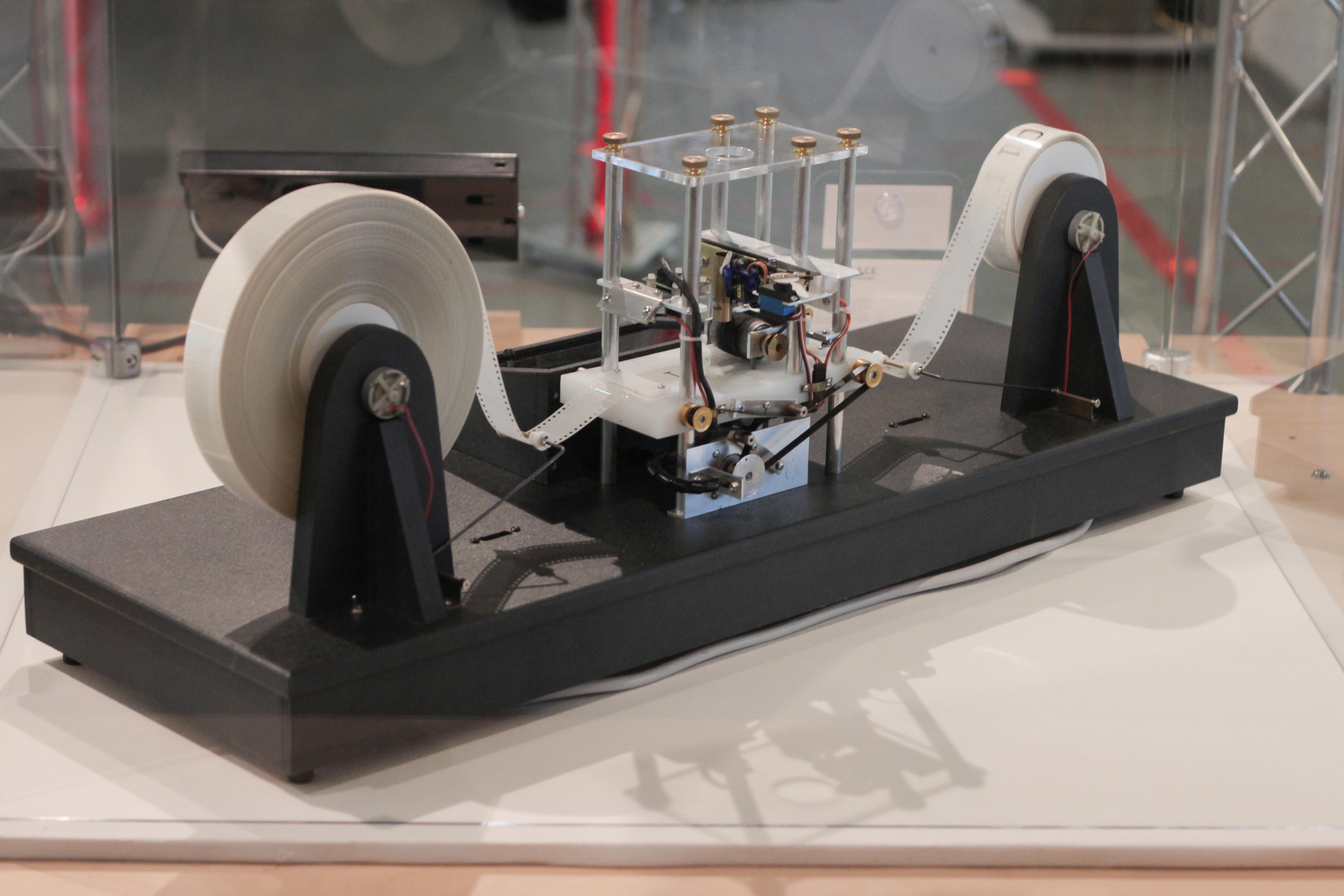

You may have heard of Alan Turing’s Universal Turing Machine (shown in Figure 1), which is able to theoretically compute anything, using two sets of infinite tape, and a read head, which is capable of performing several relatively simple operations. While it is in principle easy to construct a computer that is theoretically able to execute any arbitrary algorithm we desire (i.e. compute anything that a Universal Turing Machine can compute), we are often constrained by practicality.

CPU manufacturers design their chips to be able to perform algorithms as quickly as possible. One way in which this is achieved is by adding hardware support for common operations, such as adding two integers together or multiplying two floating-point numbers together. We will cover some of the most common hardware implemented data types below.

Unsigned Integers

As you will know, computers fundamentally represent data using binary (1s and 0s). We write our numbers in base 10 (decimal), but this is only a choice. We can equivalently write our numbers using other arbitrary bases, but the simplest is base 2 (binary). What we mean here can be shown by a diagram. Let’s take the number 42. In the decimal system, this number can be broken down into sums of powers of 10:

However, we could equally represent this number as multiples of powers of 2:

We say that we can write the number in decimal as in base 2. However, this number is just a series of 1s and 0s. We use a convention to interpret that the first column (right to left) is the 1s column, whereas the left most column is the column. Conventions are incredibly important both for humans and computers. In an instruction set, we need to rigorously define how data stored in binary format should be interpreted, as there are always choices that are somewhat arbitrary. For example, if you have 0 bits, do you interpret the digits right to left or left to right? This should be defined ahead of time. In order for the CPU to perform operations on the data, it must know how the data is organised in its binary format.

In most programming languages, integers default to being 32 or 64 bits (depending on the architecture of the platform). As we will learn in Julia, there are several data types to cover a range of sizes of unsigned integers:

UInt8 # 8 bits

UInt16 # 16 bits

UInt32 # ... etc

UInt64

UInt128The number of bits assigned to a number directly specifies the range of possible values that can be stored. In general, if you have bits, you can store possible distinct values. For unsigned integers, we can store the numbers from to inclusive.

Overflow and Underflow

As the range of values is limited, some arithmetic calculations can cause the result to be outside the capacity. When this value goes to be stored back inside the container, its value is truncated. We call this phenomenon ”overflow“.

As an example, if we take the 8-bit unsigned integer and put in the maximum value of 255 (11111111 in binary) and add 1, let’s see what we get:

x = UInt8(255);

y = UInt8(1);

println(Int(x + y))0You can see that 255 + 1 overflows to 0 when using only an 8-bit container, as this container cannot store 256, when gets truncated to 0.

You can also underflow, which can be shown with the example:

x = UInt8(2);

y = UInt8(5);

println(Int(x - y))253Notice that the result is not as expected, but in fact has underflowed into the value .

If you use 64-bit integers, you usually do not have to worry about overflow and underflow. However, if you are calculating numbers that are larger (i.e. calculating large factorials), you will usually get incorrect values as the container is not large enough to handle the default, but no errors will be thrown.

Note: Python’s integer is not strictly a machine integer. If you overflow the boundaries of a python integer, it will be converted from a machine integer into a variable size integer. This can be helpful when dealing with large numbers, but has significant negative performance implications. If you want this behaviour, you can use Julia’s BitInt type instead.

Signed Integers

Unsigned integers are useful for quantities that will never be negative (for example an index into an array). However, having a way to store negative numbers is incredibly useful, and is usually the default for most systems. The way computers achieve this is by having the first bit in the signed integer represent , where is the number of bits in the container. If we were to have a binary representation of a 4-bit signed integer as 1011, we can convert this into decimal using

This method of using the first bit to represent a negative number is called 2s compliment. This has the advantage of being able to use essentially the same circuitry to addition and subtraction. In fact, one can use 2s compliment to turn a number negative by flipping all bits and adding . This allows use to use addition instead of subtraction so that becomes .

A signed integer container with bits can store values from to . This container will also have issues of overflow and underflow. If you ever find a bug in your software where a strictly positive values suddenly flips to a negative sign, this is possibly an overflow error. You may find this error cropping up across systems in your day-to-day life as well. When Psy’s hit Gangnam Style was released to YouTube, its view count shot past 2,147,483,647 () views and flipped to a negative view count1. As such, YouTube has moved to using 64-bit integers instead of 32-bit integers to store the number of views.

Floating Point Numbers

There are international standards for how real numbers (those with decimal places) can be organised using a floating point approach. One such standard is the IEEE 754 which defines numbers like could be stored in binary. This format is very closely related to standard form notation (i.e. ).

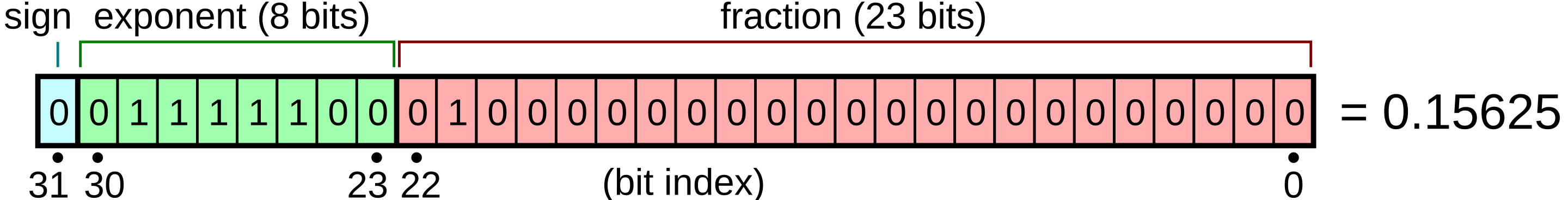

In Figure 2, we see a diagram where:

- The first bit is dedicated to storing the sign of the number (i.e. represents positive and represents negative).

- The next 8 bits are used to store the exponent (interpreted as an unsigned integer)

- The next 23 bits are used to store the “significant figures” of the number, where the first bit represents the 1s column, the next the s column, the s column etc.

This data type allows for an incredibly large range of values to be used, along with special reserved values for concepts such as NaN (Not a Number) or Inf. Using NaN allows us to represent results of operations such as .

Floating point values allow for a huge magnitude of values to be stored. For 64-bit floats, we can store values as small as around to those as large as around . It should be noted that there are only unique numbers available in this format, and as such, not all numbers can be represented.

Common Pitfalls

Floating points are probably the most common data types used in scientific computer (alongside integers), so it is important to understand their limitations. The first is that we are often required to truncate numbers to fit into a discrete number of bits. For example, when a computer adds together , the result is not the expected , but instead . These errors can also accumulate over time during a calculation. Floating point errors are incredibly common sources of bugs and should always be considered when the result you expect is almost correct, but off by a small amount.

In the scientific domain, many researchers opt to use 64-bit floating point numbers (sometimes called doubles), instead of 32-bit “floats”, which reduces the impact of floating point errors. It is even possible in some languages to use 128-bit floating point numbers as well. However, there is a performance cost incurred with these choices. If you use a 64-bit floating point number vs a 32-bit number, it will take up more space in memory. This also allows algorithms using a lower precision type to complete faster, usually with little effect on the output. We will investigate the performance implications of different data types later on in the course.

One common source of issues is adding together floating point numbers of vastly different magnitudes. If you add two standard form numbers together, you will likely have to adjust one, or both of the numbers so that they have the same exponent, before adding the significands together. As the original significands are truncated, you will inevitably lose some precision in this calculation. Let us take a very simple example of how this error might accumulate, using an example with random numbers. While we have not yet covered Julia’s syntax, this example should be easier to follow along.

function unbalanced_sum(numbers)

partial_sum = Float32(0)

for number in numbers

partial_sum += number

end

return partial_sum

endAbove, we define a function that has one variable which will we will use to store our partial sum. We set this equal to zero (a Float32) type. We then iterate through all numbers in the incoming array one at a time, and store the partial sum in the same variable.

numbers = rand(Float32, 2^28) .* Float32(10^(-8));

our_result = unbalanced_sum(numbers);

inbuilt_result = sum(numbers);Above, we create around 268 million () uniformly random Float32 numbers between and and sum them using our previously written function. We can predict the sum should roughly be around . We also use Julia’s inbuilt sum function as a comparison:

Our result = 0.25, Inbuilt Result=1.3421819, Expected Result ≈ 1.34217728

Relative Error: 81.4%Notice that the error using our inbuilt function is actually extremely large. It has a relative error of around when compared with the inbuilt function, which matches our expected sum. What you are observing is that floating point addition is not associate, i.e. floating point arithmetic usually implies

One should note that floating point arithmetic is usually commutative, such that

The result of Eq. (4) causes our custom sum function to be incorrect. This is one thing to watch out for in your algorithms. Additionally, you may get different end results if you sum up the same numbers in a different order. We will observe this in later chapters.

Question: Notice that the inbuilt sum function handled our large sum correctly. What do you think is different about its implementation that makes this result more accurate?

Boolean Values

Boolean values are simply true or false values. In most languages, this is represented as an -bit unsigned integer. This is because, on most systems, the smallest block of memory which can be addressed is a single byte2. We restrict these bits to be either all zeros (representing false) or a single in the trailing bit position to represent true. This is why often languages allow and to be interpreted as true or false respectively. This is also why arithmetic with booleans make sense, as true * 5==5 and false * 5==0.

Note that storing an array of booleans will likely take up more memory than you expect. In Julia, we can create an array of boolean values with

bool_vals = Vector{Bool}(undef, 64);

@show sizeof(bool_vals)sizeof(bool_vals) = 64Note that we have bytes or bits to store boolean values, which is not very efficient. This is why we can also use a BitVector which is designed for this purpose, to allow us to use the minimum amount of memory required (1 bit for each of the 64 booleans). The same array, converted to a BitVector has a size of

@show sizeof(BitVector(bool_vals))sizeof(BitVector(bool_vals)) = 8which gives bytes and bits, the expected amount.

Characters and Strings

Programs often need to be able to interpret text, which is usually stored as an array of characters (often called chars). Traditionally we used ASCII to represent characters such as the alphabet (lower and upper case), punctuation and numbers, along with a few other special characters. This used bits per character with a specific mapping from binary representation to character. For example, the character a is represented by the number or the binary representation 01100001. As the use of computers has grown, we have needed more and more characters. Most encoding schemes now use UTF-8 (Unicode Transformation Format) which allows for variable length encoding. Most text using the English language will revert to using standard ASCII encoding, but less common characters may use multiple bytes to represent a single character. This allows for a great deal of compression instead of extending each character to use or even bytes. UTF-8 is capable of encoding all possible valid character codes in the Unicode standard, including emojis!

A string is just a collection of characters, essentially like a special type of array. Due to UTF-8 encoding, it is often difficult to index into a string the same way one would index an array, since each character may use a variable number of bytes. Fortunately, the low level details are usually abstracted away from the programmer, but this should be kept in mind if you are iterating over a string by an index instead of character by character. If the encoded text is ASCII, with 1 byte per character, this is not an issue. Otherwise, you can find runtime errors if you try to index part way into a variable length Unicode character.

Summary

In many programming languages there are often many more varied data types that can be used. The ones shown in this section are usually the foundational types included in many languages. These types are usually called primitives and have hardware backed equivalents (i.e. dedicated circuitry to handle common operations such as arithmetic).

Footnotes

- https://www.exploringbinary.com/gangnam-style-video-overflows-youtube-counter/↩

- byte is equivalent to bits.↩